It took 40 years, 1,000 scientists, $1 billion, and, as one scientist put it, a string of program officers who often “had a tough time explaining to people why they would back such a crazy thing” for researchers to detect gravitational waves last year. This milestone was heralded by the scientific community as a monumental discovery that helped validate Einstein’s general theory of relativity and opened the door to theoretical and empirical advances in science. But it will take many more years for us to see, and measure, all the benefits of this discovery.

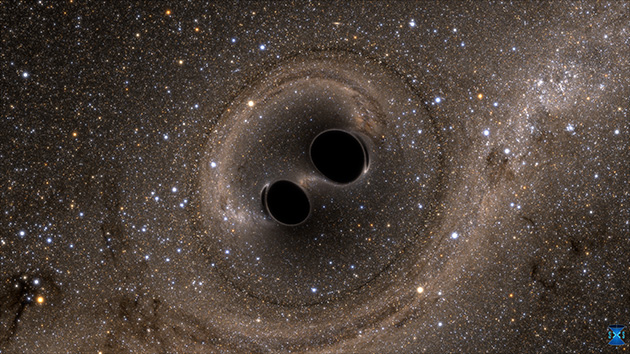

The collision of two black holes, an event detected for the first time by the Laser Interferometer Gravitational-Wave Observatory (LIGO), is seen in this still from a computer simulation. LIGO detected gravitational waves, or ripples in space and time, generated as the black holes merged. Photo Credit: Simulating eXtreme Spacetimes (SXS) Project

Basic science research—and the philanthropists, foundations, and government agencies that support it—is a crucial catalyst of advances in scientific knowledge. Every year, billions of dollars are spent all over the world to support basic science research and train the next generation of scientists. But donors face difficult decisions when they make substantial investments in work that takes place over a long period of time—and that takes even longer for its effects to be realized and measured. Even when the effects are measured, it is hard to understand exactly what made these discoveries possible. Was it a brilliant mind that would have succeeded eventually, whether helped by funding or not? Did a particular experience, relationship, funding source, or series of opportunities—planned or fortuitous—make a discovery possible? Do we even need to understand the cause-and-effect relationship, or can funders and the research community be content knowing that an experience, a relationship, a particular grant, or other opportunities helped in some way to make these discoveries possible?

The fact is, as a broad community of researchers, scientists, and others yearning for a better understanding of the world around us, we need to do a better job of assessing whether investments in science research are working. Our toolkit of social science methodologies includes useful approaches, such as randomizing access to research opportunities as a way of measuring future impacts on the knowledge base. However, these approaches are often not feasible (randomization is rarely possible in research programs), or they fail to address issues inherent in any effort to foster discoveries, such as planning to measure a potential outcome that can’t be known in advance.

Several foundations are paying close attention to these problems, which will be the focus of discussions at the meeting of the American Evaluation Association this Saturday. At 9:15 a.m., a panel on evaluating investments in basic science includes representatives from the Moore Foundation, Wellcome Trust, and Mathematica, who will give an overview of issues in the field of measurement and evaluation, discuss current practices, and identify the stubborn limitations of ongoing efforts to assess investments in basic science. Earlier that morning, an 8:00 a.m. panel organized by the new Evaluation and Assessment Capabilities unit of the National Science Foundation will focus on recent efforts to support and enhance monitoring and evaluation activities.

Basic science research is firmly grounded in our desire to advance our understanding of our world and improve our lives. These goals are too important for us to rely on efforts that are surrounded by so many unanswered questions. I hope you’ll join us for what promises to be a lively discussion this weekend and beyond as we wrestle with these problems together.